The algorithm identifies important features through repeated random sub-sampling cross-validation (CV). In each CV iteration, two thirds (2/3) of the samples are used to evaluate the importance of each feature based on one of the following:

- VIP scores (feature selection using PLS-DA),

- Decreases in accuracy (feature selection using Random Forest), or

- Weighted coefficients (feature selection using linear SVM).
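The splitting scheme and the "score features on the training part only" step can be sketched as follows. This is a minimal illustration, not the tool's implementation: `subsample_splits` and `mean_difference_importance` are hypothetical helpers, and the importance score is a deliberately simple stand-in (absolute difference of class means for a two-class problem) that replaces the VIP, Random Forest, and SVM scores listed above.

```python
import random
from statistics import mean


def subsample_splits(n_samples, n_iter, train_frac=2 / 3, seed=0):
    """Yield (train_idx, test_idx) pairs for repeated random sub-sampling CV."""
    rng = random.Random(seed)
    n_train = round(n_samples * train_frac)
    for _ in range(n_iter):
        order = list(range(n_samples))
        rng.shuffle(order)
        # First 2/3 is used to score features; the remaining 1/3 is held out.
        yield sorted(order[:n_train]), sorted(order[n_train:])


def mean_difference_importance(X, y, train_idx):
    """Stand-in importance: |mean(class 1) - mean(class 2)| per feature,
    computed on training samples only. NOT a VIP, RF, or SVM score."""
    labels = sorted({y[i] for i in train_idx})
    if len(labels) != 2:
        raise ValueError("this stand-in score assumes a two-class problem")
    n_features = len(X[0])
    scores = []
    for f in range(n_features):
        g0 = [X[i][f] for i in train_idx if y[i] == labels[0]]
        g1 = [X[i][f] for i in train_idx if y[i] == labels[1]]
        scores.append(abs(mean(g0) - mean(g1)))
    # Feature indices from most to least important (ties keep index order).
    return sorted(range(n_features), key=lambda f: -scores[f])
```

Because the held-out third never contributes to the importance scores, it can later be used for an unbiased check of models built on the top-ranked features.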

The top 2, 3, 5, 10 …100 (max) most important features are then used to build classification/regression models, which are validated on the remaining 1/3 of the samples that were left out.
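The "build on the top k, validate on the held-out third" step might look like this for classification. This is only a sketch: a nearest-centroid classifier is swapped in for the actual models, `evaluate_top_k` and `nearest_centroid_accuracy` are hypothetical names, and because the source elides the intermediate sizes ("10 …100"), the list of k values is left for the caller to supply rather than guessed.

```python
from statistics import mean


def nearest_centroid_accuracy(X, y, train_idx, test_idx, features):
    """Stand-in model: classify held-out samples by the nearest class centroid."""
    centroids = {}
    for label in sorted({y[i] for i in train_idx}):
        rows = [X[i] for i in train_idx if y[i] == label]
        centroids[label] = [mean(r[f] for r in rows) for f in features]
    correct = 0
    for i in test_idx:
        point = [X[i][f] for f in features]
        pred = min(
            centroids,
            key=lambda c: sum((a - b) ** 2 for a, b in zip(point, centroids[c])),
        )
        correct += pred == y[i]
    return correct / len(test_idx)


def evaluate_top_k(X, y, ranked_features, train_idx, test_idx, sizes):
    """Accuracy on the held-out samples for models using the top-k features.
    `sizes` is supplied by the caller; k is capped at the number of features."""
    results = {}
    for k in sizes:
        k = min(k, len(ranked_features))
        results[k] = nearest_centroid_accuracy(
            X, y, train_idx, test_idx, ranked_features[:k]
        )
    return results
```

For example, `evaluate_top_k(X, y, ranking, train, test, sizes=(2, 3, 5, 10))` returns one held-out accuracy per model size; in practice `ranking` would come from the training part of the same split.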

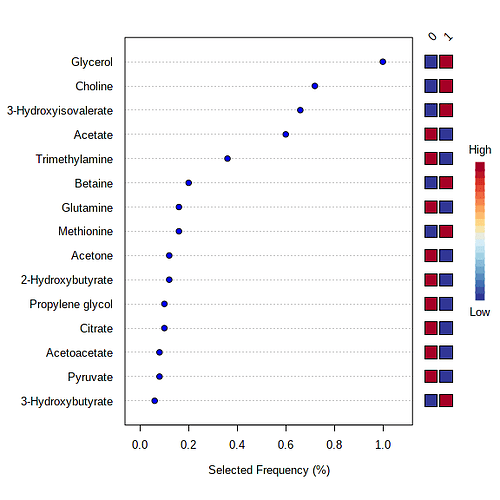

Significant features are then ranked by how frequently they are selected across these models (see the figure below).
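Ranking by selection frequency amounts to counting, over all CV runs, how often each feature appears among the selected ones. The sketch below assumes each run contributes a single list of selected features; the source does not say which model size's selection is counted, so that choice is left to the caller. `rank_by_selection_frequency` is a hypothetical helper.

```python
from collections import Counter


def rank_by_selection_frequency(selected_per_run):
    """Return (feature, frequency) pairs sorted from most to least often selected.

    `selected_per_run` holds one list of selected features per CV run
    (an assumption; duplicates within a run are counted once).
    """
    counts = Counter()
    for features in selected_per_run:
        counts.update(set(features))
    n_runs = len(selected_per_run)
    # Sort by descending frequency, then by feature name for deterministic ties.
    return sorted(
        ((f, c / n_runs) for f, c in counts.items()),
        key=lambda fc: (-fc[1], fc[0]),
    )
```

For example, runs selecting `["a", "b"]`, `["a", "c"]`, and `["a", "b"]` rank `a` first (selected in every run), then `b`, then `c`.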